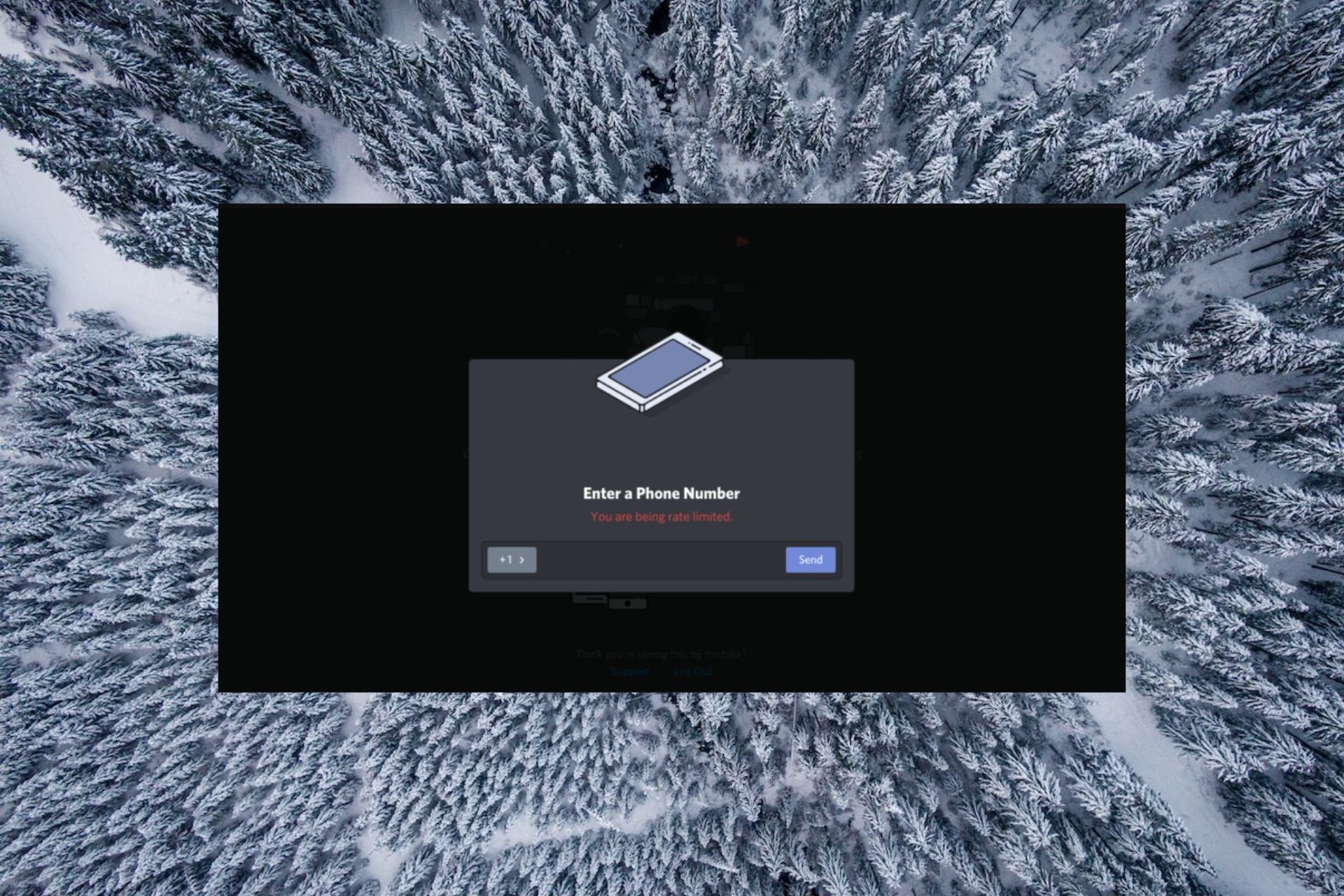

Being rate limited is a common issue. It occurs when a user or application exceeds the allowed number of requests within a specific timeframe, which triggers protective measures designed to keep the system stable. Rate limiting is a mechanism that developers and service providers use to ensure fair usage and prevent abuse of shared resources. APIs now sit behind a large share of modern applications, so understanding rate limiting has become essential for developers and end-users alike.

Rate limiting matters across a wide range of services, from social media platforms to financial systems. It helps maintain performance and protects sensitive systems from abuse. When implemented well, this traffic management technique safeguards server resources and also improves the user experience by preventing overload. It affects everything from basic web browsing to enterprise-level integrations, which makes it a fundamental topic for anyone working with digital services.

In this guide, we'll explore the technical foundations of rate limiting, common implementation strategies, and real-world applications. We'll look at how rate limiting works across different platforms, discuss best practices for handling rate-limited situations, and offer practical ways to work efficiently with rate-limited services. This article aims to help you navigate rate-limited environments successfully, whether you're a developer implementing rate limiting or an end-user trying to understand this common restriction.

Table of Contents

- What is Rate Limiting and Why is it Important?

- Understanding the Technical Aspects of Rate Limiting

- How to Handle Being Rate Limited?

- What are the Best Practices for Rate Limiting?

- What is the Impact of Rate Limiting on Business Operations?

- Future Trends in Rate Limiting Technology

- Essential Tools and Solutions for Managing Rate Limits

- Frequently Asked Questions About Rate Limiting

What is Rate Limiting and Why is it Important?

Rate limiting is a key mechanism in modern digital infrastructure, acting as a gatekeeper for API traffic and server requests. Put simply, it is a strategy that controls how many requests a user or client can make to a server within a specified time period. This keeps the system stable by preventing any single user or application from overwhelming server resources. Imagine a busy highway with multiple lanes. Rate limiting functions like a traffic control system that regulates how quickly vehicles (requests) enter, so traffic keeps moving without congestion or accidents.

The importance of rate limiting extends beyond simple traffic management. In today's interconnected ecosystem, APIs power everything from social media interactions to financial transactions, and rate limiting also plays a role in security and abuse prevention. Rate limits help service providers blunt abusive or malicious traffic while ensuring fair usage among legitimate users. This includes some Distributed Denial of Service (DDoS) attempts, although large-scale DDoS attacks generally also require network-level defenses. The result is more consistent performance, even during peak usage periods.

From a business perspective, rate limiting offers several significant benefits. It helps control infrastructure costs by preventing excessive resource consumption, enables better capacity planning, and ensures that all users receive equitable access to services. It also provides a framework for tiered service levels, allowing businesses to offer different access levels based on user needs and subscription plans. This flexibility helps organizations balance user demand against system capacity while maintaining performance across their platforms.

Understanding the Technical Aspects of Rate Limiting

Common Rate Limiting Algorithms

Several algorithms form the foundation of rate limiting implementations, each with its own characteristics and use cases.

The Token Bucket algorithm gives each client a bucket that holds up to a fixed number of tokens. Each request consumes one token, and tokens are added back at a steady, predetermined rate. When the bucket is empty, further requests are rejected (or delayed) until new tokens arrive. A full bucket can be spent all at once, so this method allows short bursts up to the bucket's capacity while still enforcing a long-term average rate. That makes it a good fit for APIs that want to tolerate occasional spikes.

The Leaky Bucket algorithm is similar in concept but behaves like a queue. Requests enter a fixed-capacity bucket and are processed (they "leak" out) at a constant rate, regardless of how quickly they arrive. This smooths bursts into a steady output stream. However, when traffic stays high long enough for the bucket to fill, new requests are dropped.

Another popular method, the Fixed Window Counter, divides time into fixed intervals (for example, one minute). It counts requests within each window and resets the count when a new window begins. It is simple to implement, but it can allow bursts at window boundaries. A client can use its full quota at the end of one window and again at the start of the next, briefly sending up to twice the nominal limit.
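The following minimal single-process sketch in Python makes the difference between the Token Bucket and the Fixed Window Counter concrete. It assumes an in-memory, single-threaded limiter; a real deployment would typically keep this state in a shared store such as a cache server. The class names, parameters, and injectable `clock` are illustrative choices, not any particular library's API.

```python
import time
from typing import Callable, Optional


class TokenBucket:
    """Holds up to `capacity` tokens, refilled continuously at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start full, so an initial burst up to capacity is allowed
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Add tokens for the time elapsed since the last check, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1  # each request consumes one token
            return True
        return False


class FixedWindowCounter:
    """Allows at most `limit` requests in each fixed window of `window` seconds."""

    def __init__(self, limit: int, window: float, clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.current_window: Optional[int] = None
        self.count = 0

    def allow(self) -> bool:
        window_id = int(self.clock() // self.window)  # index of the interval "now" falls in
        if window_id != self.current_window:
            self.current_window = window_id  # a new interval has started: reset the counter
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False


if __name__ == "__main__":
    now = [0.0]  # fake clock so the demo is deterministic
    fake_clock = lambda: now[0]

    fw = FixedWindowCounter(limit=5, window=60, clock=fake_clock)
    now[0] = 59.9
    late = sum(fw.allow() for _ in range(5))   # 5 allowed at the end of window 0
    now[0] = 60.0
    early = sum(fw.allow() for _ in range(5))  # 5 more allowed at the start of window 1
    print(late + early)  # 10 requests within 0.1 seconds: twice the nominal limit
```

The demo uses a 5-request-per-minute fixed window. It admits 5 requests at 59.9 seconds and 5 more at 60.0 seconds, which is the boundary burst described above. A token bucket would instead cap any burst at its capacity, because tokens only return gradually.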


Implementation Techniques Across Platforms

Different platforms and services apply rate limiting in ways tailored to their specific needs and architecture. Large cloud providers such as AWS and Google Cloud enforce request quotas and throttling limits across their own APIs. Their API management products (such as Amazon API Gateway) also let customers configure throttling for their own APIs. These implementations can be combined with features such as dynamic threshold adjustments and anomaly detection to handle complex traffic patterns.

Social media platforms use rate limiting to manage developer API access while keeping the platform stable. Twitter (now X), for example, has applied separate limits to requests made on behalf of a user and requests made by an application, with different thresholds for different API endpoints. This granular approach allows precise control over resource allocation and gives developers clear usage guidelines. Financial institutions similarly apply strict rate limits to protect sensitive transactions and support their security and regulatory obligations.

Modern implementations often add further layers, such as geographic rate limiting, user behavior analysis, and real-time traffic monitoring. These help providers adapt to changing usage patterns and emerging security threats. Some systems are also beginning to use machine learning to adjust limits adaptively.
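The per-client, per-endpoint idea can be sketched by keeping a separate counter for each (client, endpoint) pair. This is a hypothetical illustration in Python. The endpoint paths, the limits in `ENDPOINT_LIMITS`, and the 15-minute window are made-up values, not any platform's published limits. For brevity, it reuses the simple fixed-window approach.

```python
import time
from collections import defaultdict
from typing import Callable, Dict, Tuple

# Hypothetical per-endpoint limits (requests per window); illustrative values only.
ENDPOINT_LIMITS: Dict[str, int] = {
    "/search": 180,
    "/post": 50,
}
DEFAULT_LIMIT = 100          # used for any endpoint not listed above
WINDOW_SECONDS = 15 * 60     # hypothetical 15-minute window


class KeyedLimiter:
    """Tracks an independent fixed-window counter for every (client, endpoint) pair."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self.clock = clock
        # (client_id, endpoint) -> [window_id, count]
        self.counters: Dict[Tuple[str, str], list] = defaultdict(lambda: [None, 0])

    def allow(self, client_id: str, endpoint: str) -> bool:
        limit = ENDPOINT_LIMITS.get(endpoint, DEFAULT_LIMIT)
        window_id = int(self.clock() // WINDOW_SECONDS)
        state = self.counters[(client_id, endpoint)]
        if state[0] != window_id:
            state[0], state[1] = window_id, 0  # new window for this pair: reset its count
        if state[1] >= limit:
            return False
        state[1] += 1
        return True
```

Exhausting one client's quota on `/post` does not affect that client's `/search` quota or any other client. This is the kind of isolation that per-user and per-endpoint limits are meant to provide.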

How to Handle Being Rate Limited?

Encountering rate limits can be frustrating, but the right strategies let you manage them and keep operations running smoothly. The first step is to understand the specific limits imposed by the service you're using. Most platforms document their rate limit policies, including threshold values, reset intervals, and any mechanisms for requesting higher limits. Reviewing this information lets you plan your request patterns accordingly.

When you do hit a limit, exponential backoff is an effective retry strategy. It increases the delay between retry attempts after each failure. For example, you might wait 1 second, then 2 seconds, then 4 seconds, and so on, up to a maximum delay. Adding random jitter to each delay keeps many clients from retrying in lockstep. If the server sends a Retry-After header, its value should take precedence over your own schedule. This approach avoids triggering further rate limits and reduces unnecessary server load during high-traffic periods.

Several tools can help in rate-limited environments. The popular HTTP client Axios does not retry failed requests on its own, but add-on packages such as axios-retry provide retry logic with exponential backoff. Libraries like Bottleneck for Node.js throttle outgoing requests on the client side, letting developers cap concurrency and request rates with minimal effort. Depending on the tool, you may also be able to track remaining quota and adjust request rates in response to the server's rate limit headers.
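The following minimal sketch shows a retry loop with exponential backoff and jitter. To stay self-contained, it does not use a real HTTP client. Instead, `send` is a hypothetical callable that performs one request and returns a `(status, headers)` pair. The header lookup assumes the name is stored exactly as `Retry-After`. The loop honors `Retry-After` only when the value is a number of seconds; an HTTP-date value falls back to the backoff schedule.

```python
import random
import time
from typing import Callable, Dict, Iterator, Tuple

# Hypothetical response shape: (status_code, headers).
Response = Tuple[int, Dict[str, str]]


def backoff_delays(base: float = 1.0, cap: float = 32.0, attempts: int = 5) -> Iterator[float]:
    """Yield doubling delays (1, 2, 4, ... seconds), never exceeding `cap`."""
    for attempt in range(attempts):
        yield min(cap, base * (2 ** attempt))


def call_with_backoff(
    send: Callable[[], Response],
    max_attempts: int = 6,
    sleep: Callable[[float], None] = time.sleep,
    jitter: bool = True,
) -> Response:
    """Call `send`, retrying on HTTP 429 up to `max_attempts` total calls."""
    status, headers = send()
    for delay in backoff_delays(attempts=max_attempts - 1):
        if status != 429:
            break  # success or a non-rate-limit error: stop retrying
        retry_after = headers.get("Retry-After")
        if retry_after is not None and retry_after.isdigit():
            wait = float(retry_after)  # the server's hint takes precedence
        else:
            # "Full jitter": a random wait up to the exponential delay, so that
            # many clients do not retry at the same moment.
            wait = random.uniform(0, delay) if jitter else delay
        sleep(wait)
        status, headers = send()
    return status, headers
```

Passing `sleep` as a parameter makes the delays easy to test. In production, you would keep the default `time.sleep` or use an async equivalent.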

What are the Best Practices for Rate Limiting?

Top Tips for Developers

Developers implementing rate limiting must balance effective traffic management against a good user experience. First and foremost, design rate limiting systems with clear, predictable behavior. Return a consistent status code for rate-limited requests, typically HTTP 429 (Too Many Requests), along with headers that describe the remaining quota and when it resets. The Retry-After header, for instance, tells the client how long to wait before making another request, so clients can adjust their behavior accordingly. Its value can be either a number of seconds or an HTTP date.

Developers should also take a layered approach that combines multiple protection mechanisms, pairing basic rate limiting with measures such as behavioral analysis and anomaly detection. For example, a basic rate limit might restrict each user to 100 requests per minute. Additional layers could watch for unusual patterns, such as requests arriving from many different IP addresses with the same API key. This multi-layered approach helps catch sophisticated abuse attempts while preserving fair access for legitimate users.
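The following sketch shows how a server might build the status code and headers for a request checked against a fixed-window quota. The `X-RateLimit-*` header names are a widely used convention rather than a formal standard; an IETF draft proposes standardized `RateLimit` fields. The `quota_response` function is a hypothetical helper. Only the 429 status code and the `Retry-After` header are standard HTTP.

```python
import math
from http import HTTPStatus
from typing import Dict, Tuple


def quota_response(limit: int, used: int, reset_epoch: int, now_epoch: float) -> Tuple[int, Dict[str, str]]:
    """Build (status, headers); `used` is how many requests the client already made this window."""
    allowed = used < limit
    remaining = limit - used - 1 if allowed else 0  # quota left after this request
    headers = {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(remaining),
        "X-RateLimit-Reset": str(reset_epoch),  # Unix time at which the window resets
    }
    if allowed:
        return HTTPStatus.OK.value, headers
    # Retry-After in whole seconds, rounded up so clients never retry too early.
    headers["Retry-After"] = str(max(0, math.ceil(reset_epoch - now_epoch)))
    return HTTPStatus.TOO_MANY_REQUESTS.value, headers
```

Rounding `Retry-After` up rather than down is a deliberate choice. A client that waits exactly the advertised time will land in the new window instead of being rejected again.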

Strategies for End-Users

For end-users navigating rate-limited environments, several strategies can help maintain productivity while respecting system constraints. One effective approach is to implement request batching, where multiple operations are combined into a single API call when possible. This technique not only helps conserve rate limit quota but also reduces overall network overhead. For instance, instead of making individual requests for each data point, users can often retrieve multiple records in a single call using appropriate query parameters. Caching represents another powerful tool for managing rate limits. By storing frequently accessed data locally and implementing appropriate expiration policies, users can significantly reduce the number of required API calls. When implementing caching strategies, it's important to consider both time-based expiration and cache invalidation mechanisms to ensure data remains current while maximizing cache utilization. Additionally, implementing intelligent request scheduling can help distribute traffic more evenly throughout the day, avoiding peak usage periods and potential rate limit issues.
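Caching with time-based expiration plus explicit invalidation can be sketched as a small wrapper around any fetch function. This is a hypothetical, in-memory, single-process example in Python. The names `TTLCache` and `get_or_fetch` are illustrative, and the TTL value is up to you.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Cache fetched values for `ttl` seconds so repeated lookups don't spend API quota."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._store: Dict[Hashable, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_fetch(self, key: Hashable, fetch: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # fresh hit: no API call needed
        value = fetch()      # miss or expired: exactly one API call
        self._store[key] = (now + self.ttl, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        """Drop an entry early, e.g. after the client itself updates that record."""
        self._store.pop(key, None)
```

Suppose you call `cache.get_or_fetch("user:42", lambda: fetch_user(42))` twice within the TTL, where `fetch_user` is a stand-in for whatever function actually calls the API. The API is called only once.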

What is the Impact of Rate Limiting on Business Operations?

Rate limiting has a significant influence on business operations, particularly for digital services and API-driven ecosystems. Financially, well-implemented rate limiting can reduce costs by preventing infrastructure overuse and reducing the need for emergency scaling. Companies that manage their rate limiting policies well tend to allocate resources more predictably, maintaining performance while keeping operating expenses under control. The same applies to cloud computing, where careful traffic management can prevent unnecessary auto-scaling events and their associated costs.

The impact on customer experience is more nuanced. Rate limiting keeps systems stable and prevents service degradation during peak periods, but overly restrictive policies can frustrate users and hinder business growth. Customers who repeatedly run into limits may move to alternative services, so businesses must strike a careful balance between protection and accessibility. To soften the impact, many organizations use tiered rate limits, offering basic access to all users and higher quotas to premium customers or enterprise clients.

Rate limiting also supports regulatory compliance, particularly in industries that handle sensitive data or financial transactions. By controlling access patterns and monitoring usage metrics, businesses can demonstrate adherence to security standards and data protection regulations. This can increase trust from both customers and regulators, potentially opening doors to new business opportunities.
Furthermore, the data collected through rate limiting mechanisms can provide valuable insights into user behavior and system performance, enabling data-driven decision-making and strategic planning.

Future Trends in Rate Limiting Technology

Rate limiting technology is likely to keep evolving, with artificial intelligence and machine learning playing a growing role. Emerging adaptive rate limiting systems use predictive analytics to adjust thresholds dynamically based on real-time traffic patterns and historical data. Compared with traditional static limits, these systems aim to respond more effectively to unusual traffic spikes and to distinguish legitimate usage spikes from abuse attempts.

Blockchain technology has also been proposed for rate limiting. Decentralized mechanisms could offer more transparent, tamper-resistant usage tracking, potentially enabling cross-platform quota management. This approach might prove most relevant to decentralized applications and Web3 ecosystems, where traditional centralized rate limiting methods may not fit. Meanwhile, the rise of edge computing is already moving more traffic management closer to end-users, reducing latency and improving overall responsiveness.


Essential Tools and Solutions for Managing Rate Limits

Managing rate limits effectively requires a combination of tools and strategies suited to different operational needs. API gateways and web servers such as Kong API Gateway and NGINX offer robust features for enforcing traffic management policies. For instance, Kong's Rate Limiting plugin can apply limits per consumer, credential, IP address, service, header, or path, and Kong's commercial offerings add analytics and monitoring dashboards. NGINX provides request rate limiting through its limit_req module, with a live monitoring dashboard available in the commercial NGINX Plus.

Cloud-based solutions help manage rate limits across distributed systems. AWS WAF (Web Application Firewall) offers rate-based rules, and Google Cloud Armor offers rate limiting rules. Both integrate with their providers' broader security and traffic management ecosystems. These platforms can scope limits to particular clients or regions and combine them with other threat detection features. Dedicated third-party rate limiting services also exist, offering managed API traffic control and real-time monitoring.

Frequently Asked Questions About Rate Limiting

What happens when you exceed rate limits?

When you exceed rate limits, the server typically responds with an HTTP 429 status code, indicating too many requests. The response usually includes headers specifying when you can make additional requests and how long you need to wait before